Mastering Digit Recognition in Under 100 Lines - A Concise Guide to Neural Networks with PyTorch and MNIST

Milind Soorya / July 12, 2024

Introduction

Imagine a world where machines understand handwritten notes as easily as humans do, transforming scribbles into digital text in the blink of an eye. This isn’t a page from a sci-fi novel; it’s the reality crafted by neural networks! Today, let’s demystify one such marvel of machine learning — a feedforward neural network for digit recognition.

In this short tutorial, I will explain the basics of an image recognition neural network. Its job is to classify the handwritten digits in the MNIST dataset.

Before going any further, a huge thanks to Valerio Velardo of The Sound of AI YouTube channel for the PyTorch for Audio + Music Processing course. It is excellent, and I recommend that everyone check out the series.

Code and Data

Please refer to the code on my GitHub (it’s under 100 lines): 01 Training a Feed Forward Neural Network

Demystifying the Code

Our journey begins with a Python script using PyTorch, a leading library for building neural networks. The script is more than just lines of code; it’s a blueprint for understanding how machines learn from data.

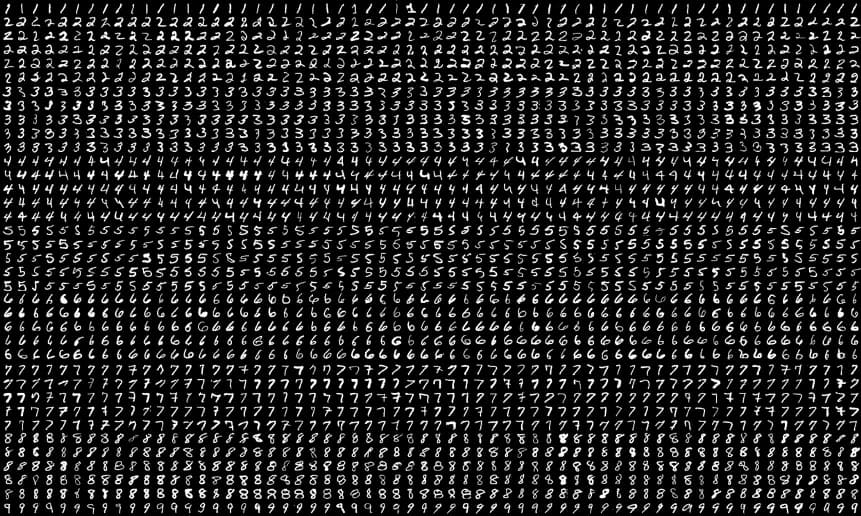

Downloading the Dataset: First, the script downloads the MNIST dataset, a collection of 70,000 handwritten digit images (60,000 for training and 10,000 for testing), each a 28x28 grayscale picture. It’s like giving a child a book filled with numbers to practice before a math test.

Crafting the Model: Enter the FeedForwardNet, a series of mathematical functions layered to form a decision-making pathway. It's akin to a complex assembly line where each section has a specific task, turning raw pixel data into predictions.

Training Time: The heart of learning! Here, the network iteratively adjusts its parameters to reduce errors, much like a chef tasting and seasoning their dish until it’s just right.

Saving Wisdom: Finally, the trained model is saved, encapsulating all the learned patterns into a file, ready to predict new, unseen digits.
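
The four steps above can be sketched roughly as follows. Treat this as a minimal sketch and not necessarily the exact script from the repository: the hidden size of 256, the batch size, the learning rate, the epoch count, the Adam optimiser, and the file name `feedforwardnet.pth` are all assumptions picked for illustration. One design choice to note: `nn.CrossEntropyLoss` applies log-softmax internally, so this sketch returns raw scores from `forward` and leaves softmax for prediction time.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader


class FeedForwardNet(nn.Module):
    def __init__(self, hidden_size=256):  # hidden size is an assumed value
        super().__init__()
        self.flatten = nn.Flatten()
        self.dense_layers = nn.Sequential(
            nn.Linear(28 * 28, hidden_size),
            nn.ReLU(),
            nn.Linear(hidden_size, 10),  # one output per digit 0-9
        )

    def forward(self, x):
        # Returns raw scores (logits); softmax is applied at prediction time.
        return self.dense_layers(self.flatten(x))


def download_mnist(root="data"):
    # Imported here so the model and training code work without torchvision.
    from torchvision import datasets
    from torchvision.transforms import ToTensor

    train = datasets.MNIST(root=root, train=True, download=True, transform=ToTensor())
    test = datasets.MNIST(root=root, train=False, download=True, transform=ToTensor())
    return train, test


def train_one_epoch(model, loader, loss_fn, optimiser, device="cpu"):
    model.train()
    last_loss = None
    for inputs, targets in loader:
        inputs, targets = inputs.to(device), targets.to(device)
        loss = loss_fn(model(inputs), targets)  # how wrong are the predictions?
        optimiser.zero_grad()                   # clear gradients from the last step
        loss.backward()                         # compute new gradients
        optimiser.step()                        # nudge the weights
        last_loss = loss.item()
    return last_loss


def main(epochs=10, batch_size=128, lr=1e-3, path="feedforwardnet.pth"):
    train_data, _ = download_mnist()
    loader = DataLoader(train_data, batch_size=batch_size, shuffle=True)
    model = FeedForwardNet()
    loss_fn = nn.CrossEntropyLoss()
    optimiser = torch.optim.Adam(model.parameters(), lr=lr)
    for epoch in range(epochs):
        loss = train_one_epoch(model, loader, loss_fn, optimiser)
        print(f"epoch {epoch + 1}: loss {loss:.4f}")
    torch.save(model.state_dict(), path)  # save only the learned parameters
```

Calling `main()` downloads MNIST into `data/`, trains the network, and writes the weights to disk. To reuse them later, create a fresh `FeedForwardNet()` and call `load_state_dict(torch.load(path))` on it.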

Practical Application

Why does digit recognition matter? Every time a post office sorts mail by reading zip codes or a bank processes checks, it relies on technology like this. It’s not just about numbers; it’s about making everyday tasks faster, more accurate, and more efficient.

Deep Dive into the Network

Let’s take a closer look at the key components of our FeedForwardNet model and understand their significance in the process of digit recognition. Each component plays a specific role in transforming the input data (images of handwritten digits) into a predicted output (the recognized digit).

Flattening Layer:

When an image is fed into the neural network, it typically arrives as a 2D array of pixels (if it’s grayscale, as MNIST is) or a 3D array (if it’s in color). However, the linear layers in this network expect each image as a 1D array. That’s where the flattening layer comes into play.

- Before Flattening: Imagine a 28x28 grid, each cell representing a pixel’s intensity in the image of a digit.

- After Flattening: The grid is transformed into a single long series of values (784 values for a 28x28 image), making it a format that the subsequent layers of the network can work with.

Flattening is like unrolling a rolled-up scroll of pixels into a long straight line.
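
To make the reshaping concrete, here is a small plain-Python sketch of what flattening does to a single image. The `flatten` helper and the toy 3x3 grid are purely illustrative; in PyTorch the same step is typically handled by `nn.Flatten`.

```python
def flatten(grid):
    """Unroll a 2D grid (a list of rows) into one flat list, row by row."""
    return [pixel for row in grid for pixel in row]


# A toy 3x3 "image" of a vertical stroke; a real MNIST image is 28x28.
tiny_image = [
    [0, 1, 0],
    [0, 1, 0],
    [0, 1, 0],
]
flat = flatten(tiny_image)
print(flat)  # [0, 1, 0, 0, 1, 0, 0, 1, 0]

# An all-black image with MNIST's dimensions flattens to 784 values.
mnist_sized = [[0.0] * 28 for _ in range(28)]
print(len(flatten(mnist_sized)))  # 784
```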

Linear (Dense) Layers:

The linear layers, also known as dense layers, are the core building blocks of a neural network. Each layer consists of neurons, where each neuron in one layer is connected to all neurons in the next layer. These connections have associated weights and biases, which are adjusted during the training process.

- Function: Each neuron computes a weighted sum of the inputs from the previous layer and adds a bias. Whether a neuron then "activates" is decided by the activation function that follows (ReLU in this network), not by the linear layer itself.

- Role in Digit Recognition: They are responsible for gradually abstracting and transforming the flattened pixel values into a form that the network can use to make a prediction.
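
The weighted sum is easy to see in plain Python. The `linear` helper and the numbers below are made up for illustration, with three inputs feeding two neurons; in PyTorch the equivalent layer would be `nn.Linear(3, 2)`, with the weights learned during training instead of written by hand.

```python
def linear(inputs, weights, biases):
    """One dense layer: each neuron takes a weighted sum of all inputs, plus a bias."""
    return [
        sum(x * w for x, w in zip(inputs, neuron_weights)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


inputs = [1.0, 2.0, 3.0]
weights = [
    [0.5, -1.0, 0.0],  # weights of neuron 0, one per input
    [1.0, 1.0, 1.0],   # weights of neuron 1, one per input
]
biases = [0.5, -1.0]

outputs = linear(inputs, weights, biases)
print(outputs)  # [-1.0, 5.0]
```

Neuron 0 computes 0.5·1 − 1·2 + 0·3 + 0.5 = −1.0, and neuron 1 computes 1 + 2 + 3 − 1 = 5.0. Every input contributes to every neuron, which is what "fully connected" means.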

ReLU (Rectified Linear Unit) Layer:

ReLU is an activation function applied to the output of a linear layer. It introduces non-linearity into the network, allowing it to learn complex patterns. If you would like to know more about ReLU and why non-linearity matters, read this short article:

Understanding ReLU — The power of non-linearity in Neural Networks

- Function: It transforms all negative values to 0 while keeping all positive values the same.

- Significance: Without a non-linear step, any stack of linear layers collapses into a single linear transformation. ReLU breaks that limitation, which is crucial because the relationship between raw pixels and the digit they depict is not linear.
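
In code, ReLU is about as simple as an activation function gets. The snippet below is a plain-Python sketch with made-up input values; PyTorch provides the same operation as `nn.ReLU`.

```python
def relu(values):
    """Replace every negative value with 0 and leave the rest untouched."""
    return [max(0.0, v) for v in values]


activations = relu([-2.0, -0.5, 0.0, 1.5, 3.0])
print(activations)  # [0.0, 0.0, 0.0, 1.5, 3.0]
```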

Softmax Layer:

Finally, the softmax layer is typically used in the output layer of a classifier, where we want to interpret the outputs as probabilities.

- Function: It exponentiates the output values and divides each by their total, so that every value lies between 0 and 1 and they all add up to 1, forming a valid probability distribution.

- Role in Digit Recognition: Each output corresponds to the network’s confidence that the input image belongs to a particular class (digits 0–9). The digit corresponding to the highest probability is taken as the prediction.
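
Here is a small plain-Python sketch of softmax and of picking the predicted digit. The ten scores are invented for the example and do not come from a trained model; in PyTorch the same operation is available as `nn.Softmax` or `torch.softmax`.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    # Subtracting the largest score avoids overflow in exp() and
    # does not change the result.
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw outputs for the digits 0 through 9.
scores = [0.1, 0.3, 2.5, 0.2, -1.0, 0.0, 0.4, 4.0, 1.1, 0.6]
probs = softmax(scores)

predicted_digit = probs.index(max(probs))
print(predicted_digit)       # 7
print(round(sum(probs), 6))  # 1.0
```

The highest raw score sits at index 7, so after softmax that digit also has the highest probability and becomes the prediction.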

Enhancing the Code

What if you change the learning rate or add more layers? The script is a playground for experimentation. Try adjusting the parameters or architecture and observe how it impacts performance. Can you beat its current accuracy?
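
One way to set up such experiments is to make the architecture a parameter. The `build_net` and `count_parameters` helpers below are hypothetical and not part of the original script, and the layer sizes are assumptions; the sketch only shows how adding a hidden layer changes the model you would then train.

```python
import torch
from torch import nn


def build_net(hidden_sizes=(256,), n_inputs=28 * 28, n_classes=10):
    """Build a feedforward classifier with one Linear+ReLU pair per hidden size."""
    layers = [nn.Flatten()]
    previous = n_inputs
    for size in hidden_sizes:
        layers += [nn.Linear(previous, size), nn.ReLU()]
        previous = size
    layers.append(nn.Linear(previous, n_classes))  # output layer: raw scores
    return nn.Sequential(*layers)


def count_parameters(model):
    """Total number of learnable weights and biases."""
    return sum(p.numel() for p in model.parameters())


small = build_net(hidden_sizes=(256,))
deeper = build_net(hidden_sizes=(256, 128))
print(count_parameters(small))   # 203530
print(count_parameters(deeper))  # 235146
```

The learning rate is just as easy to vary: pass a different `lr` when creating the optimiser, for example `torch.optim.Adam(deeper.parameters(), lr=1e-4)`, then compare the resulting accuracy across runs.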

Conclusion

We’ve journeyed through the layers of a neural network, understanding its architecture, purpose, and potential. This script isn’t just a tool for digit recognition; it’s a stepping stone into the vast universe of machine learning. Dive in, tweak, experiment, and watch as the once mysterious neural network becomes a familiar friend. The world of artificial intelligence awaits your curiosity!